Child Sexual Abuse Material (CSAM) is the documentation of horrific child sexual abuse. In 2022 alone, the National Center for Missing and Exploited Children’s (NCMEC) CyberTipline received more than 32 million reports of suspected child sexual exploitation. The viral spread of CSAM is an exponential problem that we at Thorn and others in the child safety ecosystem are working tirelessly to address.

New CSAM is produced and uploaded to online platforms every day, and it often depicts a child who is being actively abused. CSAM that goes undetected is at serious risk of being shared widely across the web, contributing to the revictimization of the child it depicts.

Unfortunately, some online platforms do not proactively detect CSAM and rely solely on user reports. Other platforms that do detect it can only find known CSAM. Thorn's CSAM classifier is unique in that it detects unknown CSAM, that is, material that already exists but has not yet been classified as CSAM.

As you can imagine, the sheer volume of potential CSAM to review and evaluate far outweighs the number of human moderators and the hours in a day. So how do we solve this problem?

To help find and rescue the child victims depicted in this material, we need a heavy-duty toolset, and classifiers are a key part of it.

What exactly is a classifier?

Classifiers are algorithms that use machine learning to automatically sort data into categories.

For example, when an email arrives in your spam folder, there is a classifier at work.

It was trained on data to determine which emails are most likely to be spam and which are not. As it receives more emails and users keep telling it whether it got them right, it gets better and better at sorting them.

The power these classifiers unlock is the ability to label new data using what they’ve learned from historical data – in this case, to predict whether new emails are likely to be spam.
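
To make the spam example concrete, here is a minimal Python sketch of one classic technique, a naive Bayes text classifier, trained on a handful of made-up emails. The training emails, the `train` and `predict` functions, and the whitespace tokenizer are hypothetical and chosen only for illustration; real spam filters use much richer models and far more data, and this is not how Thorn's classifier is built.

```python
import math
from collections import Counter

LABELS = ("spam", "ham")

def tokenize(text):
    # Naive tokenizer (illustrative assumption): lowercase and split on whitespace
    return text.lower().split()

def train(examples):
    """Count words per label from historical (text, label) pairs."""
    word_counts = {label: Counter() for label in LABELS}
    doc_counts = Counter()
    for text, label in examples:
        doc_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = set().union(*word_counts.values())
    return word_counts, doc_counts, vocab

def predict(model, text):
    """Return the label with the highest naive Bayes log-probability."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in LABELS:
        # Log prior: how common this label was in the historical data
        score = math.log(doc_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            # Add-one (Laplace) smoothing so an unseen word does not zero out the score
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical historical data
history = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch on monday with the team", "ham"),
]
model = train(history)
print(predict(model, "free prize money"))        # spam
print(predict(model, "team meeting on monday"))  # ham
```

The model never saw the exact phrase "free prize money", but it labels it spam because those words appeared mostly in past spam. That is the "learn from historical data, label new data" idea in miniature.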

How does the Thorn CSAM classifier work?

Thorn's CSAM Classifier is a machine learning-based tool that can find new or unknown CSAM in images and videos. It was trained on known CSAM. When potential CSAM is flagged for review and a moderator confirms whether or not it is CSAM, the classifier learns from that decision. This feedback loop lets it keep improving at detecting new material.
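
A feedback loop like the one described above can be sketched in a few lines. This is a toy that assumes each item has already been reduced to a small numeric feature vector; the `FeedbackClassifier` class, the nearest-centroid rule, and the feature values are hypothetical stand-ins, not Thorn's model or the Safer API. The idea it shows is that every moderator decision becomes a new labeled example, and the model is refit on the growing set.

```python
from statistics import mean

def centroid(points):
    # Coordinate-wise average of a list of feature vectors
    return tuple(mean(coords) for coords in zip(*points))

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

class FeedbackClassifier:
    """Toy nearest-centroid classifier that is refit after every human review.

    Hypothetical setup: items are pre-reduced to numeric feature vectors;
    True means "confirmed by a reviewer", False means "not confirmed".
    """

    def __init__(self, labeled):
        self.labeled = list(labeled)
        self.retrain()

    def retrain(self):
        positives = [f for f, confirmed in self.labeled if confirmed]
        negatives = [f for f, confirmed in self.labeled if not confirmed]
        self.pos_center = centroid(positives)
        self.neg_center = centroid(negatives)

    def flag(self, features):
        # Flag for human review when the item sits closer to confirmed examples
        return squared_distance(features, self.pos_center) < squared_distance(features, self.neg_center)

    def record_review(self, features, confirmed):
        # The reviewer's decision becomes a new training example; then refit
        self.labeled.append((features, confirmed))
        self.retrain()

clf = FeedbackClassifier([((1, 1), True), ((1, 2), True), ((5, 5), False), ((6, 5), False)])
print(clf.flag((3, 3)))   # True: flagged for review
clf.record_review((3, 3), confirmed=False)
print(clf.flag((3, 3)))   # False: the reviewer's correction was learned
```

An item the model wrongly flagged stops being flagged once the reviewer's "not confirmed" decision is folded back into the training data. Real systems retrain far less often and on far more data, but the loop has the same shape.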

Here’s how different partners in the child protection ecosystem are using this technology:

- Law enforcement can identify victims faster as the classifier surfaces unknown CSAM images and videos during investigations.

- NGOs can identify victims and connect them to support resources faster.

- Online platforms can extend their detection capabilities and discover previously unseen or unreported CSAM by deploying the classifier through Safer, our all-in-one CSAM detection solution.

As mentioned earlier, some online platforms do not proactively detect CSAM and rely solely on user reports. Other platforms use hash matching, which can only find known CSAM and cannot detect new or unknown material. That's why Thorn's technology is a game-changer: we built a CSAM classifier to detect unknown CSAM.
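
The limitation of hash matching is easy to see in code. The sketch below uses an exact SHA-256 hash from Python's standard library purely as an illustration; real hash-matching systems typically rely on perceptual hashes that tolerate resizing or re-encoding, and the `build_hash_list` and `matches_known` names are hypothetical. Either way, a file can only match if its fingerprint is already on the list, which is why material nobody has seen before needs a classifier.

```python
import hashlib

def fingerprint(data: bytes) -> str:
    # Exact cryptographic hash: any change to the bytes gives a different value
    return hashlib.sha256(data).hexdigest()

def build_hash_list(known_items):
    # Hypothetical list of fingerprints for material already confirmed and catalogued
    return {fingerprint(item) for item in known_items}

def matches_known(data: bytes, hash_list: set) -> bool:
    # Matching can only answer "have we seen exactly this before?"
    return fingerprint(data) in hash_list

hash_list = build_hash_list([b"previously reported file A", b"previously reported file B"])
print(matches_known(b"previously reported file A", hash_list))     # True: already on the list
print(matches_known(b"a file nobody has seen before", hash_list))  # False: new material is invisible
```

Hash matching is fast and precise for known material, so it complements rather than replaces a classifier, which can score content that has never been catalogued.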

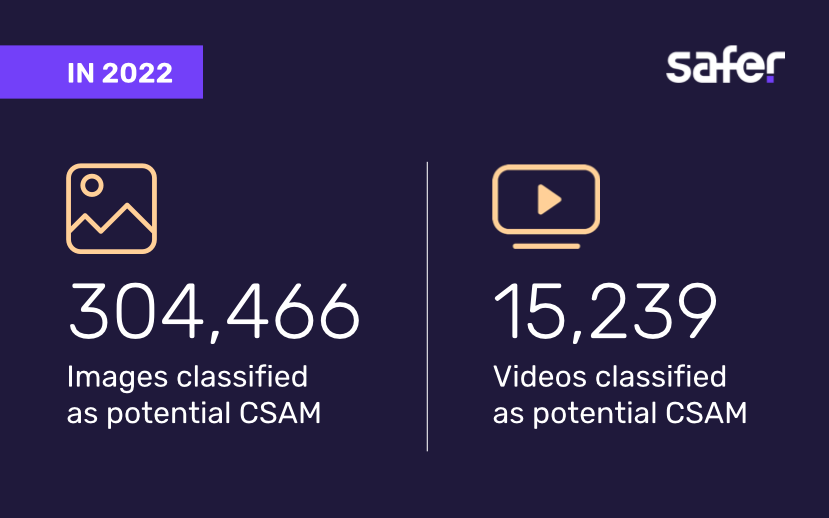

Safer, our all-in-one solution for CSAM detection, combines advanced AI technology with self-hosted deployment to detect, investigate and report CSAM at scale. In 2022, Safer had a significant impact for our customers, classifying 304,466 images and 15,238 videos as potential CSAM.

How does this technology help real people?

Finding new and unknown CSAM often depends on manual processes that place a heavy burden on human reviewers, or on user reports. To put things into perspective, you would need a team of hundreds of people with unlimited hours to achieve what a CSAM classifier can do through automation.

Because new CSAM may depict a child who is being actively abused, CSAM classifiers can dramatically reduce the time it takes to find that victim and remove them from harm.

A Flickr success story

Image and video hosting site Flickr uses Thorn's CSAM Classifier to help its reviewers sort through the mountain of new content uploaded to the site every day.

As Flickr Head of Trust and Safety Jace Pomales summed it up, "We don't have a million bodies to throw at this problem, so having the right tooling is really important to us."

A recent classifier success led to the discovery of 2,000 previously unknown CSAM images. Once they were reported to NCMEC, law enforcement conducted an investigation, and a child was rescued from active abuse. That is the power of this technology to change lives.

Technology must be part of the solution if we are to stay ahead of the threats children face in a rapidly changing world. Whether through our products or our programs, we embrace the latest tools and expertise to make the world safer so that every child can simply be a child. It is thanks to our generous supporters and donors that our work is possible. Thank you for believing in this important mission.

If you work in the technology industry and want to use Safer and the CSAM classifier on your online platform, please contact info@safer.io. If you work in law enforcement, you can contact info@thorn.org or fill out this application.